AI Agents for Large Codebases

Not so long ago, the tech sphere spotlight was on low-code platforms like ReTool, Refine, and Appsmith, with discussions about the potential for them to supersede software developers. However, the focus has recently shifted to AI agents, with many low-code tools rebranding themselves as AI builders or whatever catchy phrase the marketing chaps might conjure up next.

In the realm of toy projects and internal tooling, current AI agents have demonstrated considerable capabilities, delivering substantial value in a remarkably short span. For software applications that are not directly exposed to hackers and do not require complex authentication and authorization workflows, AI agents can be a boon.

When it comes to large codebases, the narrative is somewhat different. Enterprise software typically exhibits a level of complexity that surpasses the capabilities of AI agents. Even with models boasting colossal context windows, the very act of providing pertinent and comprehensive context presents a gargantuan challenge.

The Current State of the Art

In the previous post, which would certainly be worth a read if you're interested in the current state of AI agency, we conducted a comparison of popular AI agent interfaces and derived several key observations:

- The primary emphasis ought to be on a thorough analysis of the codebase, offering a visual representation of the logical flow before any code modifications are implemented;

- User interfaces should incorporate a "continue" function, thereby preventing the model from losing its line of reasoning;

- Upon completion of a task, the UI should prompt the model to perform a detailed self-assessment;

- The actual commercial viability of these tools remains an open question.

The previous article, which featured real-world tests, revealed that popular tools are not yet fit for the task. The limited success they achieved can be attributed to the quality of Claude Sonnet as a "coder model", and the popular AI agent interfaces have not made significant strides in addressing the context problem. Or have they?

Enters Augment

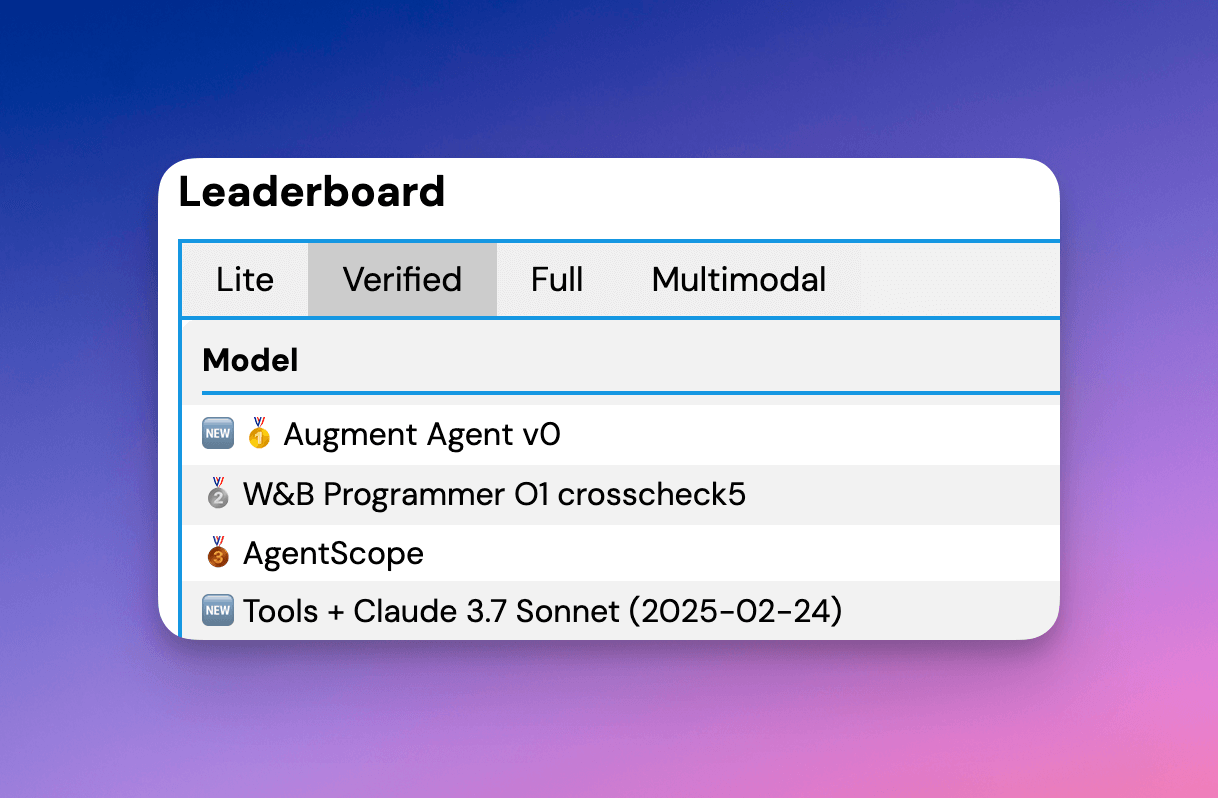

During a recent review of GitHub issues, I encountered AugmentCode. Their approach stands out for its focus on benchmarking performance on large codebases (SWE-Bench Verified) and their positioning as an AI agent specifically for engineers working with substantial codebases.

The SWE-bench call to action on their website links to an article on their blog, which offers some intriguing and bold perspectives. Here are some highlights that resonated with me:

- "In a world where vendors are investing heavily in UX and offering a long list of models, our main bet is on context — not a dropdown" - AI model pickers are a design failure, not a feature;

- "Where our competitor’s AIs are capable of “0 to 1” starter projects, Augment shines supporting teams of software engineers looking after larger, more complex codebases" - To fork or not to fork?;

- "Contextually relevant, and genuinely helpful requires a deep understanding of both developer intent and the full codebase in which they are operating." and "RLDB is designed to capture valuable signals directly from the actions of software developers in the course of their normal work." - Reinforcement Learning from Developer Behaviors;

- "While you code, it's scanning your codebase, identifying dependent files, and generating contextual suggestions that keep your code in sync." - Introducing Next Edit;

- "We remain convinced that making quality software far more affordable and predictable will increase overall demand (following Jevon’s paradox)" - 6 predictions for AI in 2025.

It's no wonder their first post announced raising $227 million and achieving SOC2 Type II certification. The statements I extracted from their blog posts clearly indicate that they are taking a different approach than the rest of the industry.

While mainstream media coverage has been limited, Augment's approach warrants closer examination, particularly for developers working with complex projects.

In particular, their "Reinforcement Learning from Developer Behavior" (RLDB) technology has sparked my interest. The post about RLDB touches on how it works, mentioning the scanning of signals as developers work on their projects. This suggests that their technology not only scans the entire codebase but also takes notes on the project as the developer works on it, which is a significant departure from the current state of affairs.

We will be putting Augment to the test in due course. However, before we proceed, let us briefly discuss the recent pricing updates for Serena and Claude Desktop.

Serena and Claude Desktop Pricing Update

Initially, the intention for this article was to feature a comparison of Serena, an open-source coding agent toolkit leveraging the Language Server Protocol (LSP) to facilitate semantic code retrieval for Large Language Models (LLMs) in conjunction with popular development tools.

Although Serena supports Agno, a model-agnostic agent framework allowing integration with various IDE extensions, its primary aim appears to be that of an MCP server. This would enable users to utilize Claude Desktop for coding tasks, with costs tied to the regular Claude Desktop subscription rather than incurring per-API call charges, according to their README.md:

We got tired of having to pay multiple IDE-based subscriptions [...]. The substantial API costs incurred by tools like Claude Code, Cline, Aider and other API-based tools are similarly unattractive.

I must admit that I was initially skeptical about this approach, as well as any MCP implementation and its practical application thus far. The business model underpinning Claude Desktop did not seem entirely coherent to me, echoing concerns raised in the previous article regarding the viability of the business models of such AI agents.

OpenAI's ChatGPT Pro plan, for instance, was already priced at $200, even before the recent surge in interest in generating Ghibli-style images. In contrast, Claude's Pro (and sole) paid plan was priced at a mere $20, promising to automate a wide array of computer-based tasks.

This raised questions about the underlying infrastructure costs. Consequently, I visited the Claude subreddit to gauge the community's experience regarding its seamless operation.

I was not surprised to find that most topics were about being limited even on the Pro plan ($20) and that the company should announce more extended usage plans. It appears that Anthropic has taken note of these concerns, as they recently announced $100 and $200 plans.

The significant price increase, ranging from 5 to 10 times the initial plan, would be considered ludicrous in any other industry. It is surprising that Anthropic waited so long to update their pricing.

Using Claude Desktop in combination with a MCP for coding is not a straightforward experience. It requires extensive configuration and lacks IDE integration. Essentially, it automates the process of asking the model for a specific piece of code and copy-pasting it into the editor, but with context retrieval via LSP.

While it is possible to combine it with Roo Code, our previous article showed that Roo Code freezes with large prompts and would incur additional API costs. Therefore, testing Serena with Claude Desktop or with Roo Code (via Agno) did not seem like an economically or practically viable decision.

Unless, of course, one is willing to pay $200/month for Claude Desktop to work (in theory) without hiccups, albeit without a proper IDE to work with. This seems like a pricey solution, especially considering the makeshift nature of the setup.

My perspective on this may evolve in the future should I consider running my own model locally or on a rented GPU server. This would afford complete data security and eliminate per-API call charges, despite the initial investment in the server and ongoing maintenance costs. Perhaps by then, Roo Code will have achieved improved handling of substantial prompts?

Real World Tests with Augment

In this ever-evolving landscape of AI tools, a new contender seems to emerge daily. While I fully appreciate that Augment may not represent the absolute pinnacle of AI capability, its top ranking on the SWE-Bench Verified benchmark certainly warrants closer scrutiny than many other tools currently available.

Regrettably, I am unable to replicate the initial test conducted in the first article. However, I can revisit the second and third tasks. It's worth noting that my preliminary testing of Augment for auto-completion and single-file agent tasks over the past few days suggests it would have handled the first task with greater proficiency than any other tool I have previously encountered.

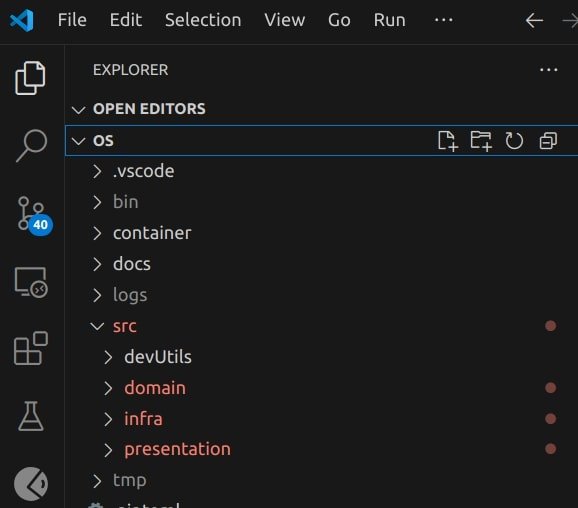

The agent will be working with Infinite OS, an open-source, metamorphic container image that allows users to deploy applications without the need for Dockerfiles, relying solely on user interfaces.

It is strongly advised to read the preceding article before continuing, as I shall refrain from reiterating the task descriptions and instead focus on the observed outcomes.

Task 2: Multi-files Changes

Upon initial project access, Augment undertakes an indexing of the files and attempts to discern the project's nature, subsequently providing potentially helpful queries. Intriguingly, it also reads your git configuration to ascertain your name and email – a rather thoughtful touch.

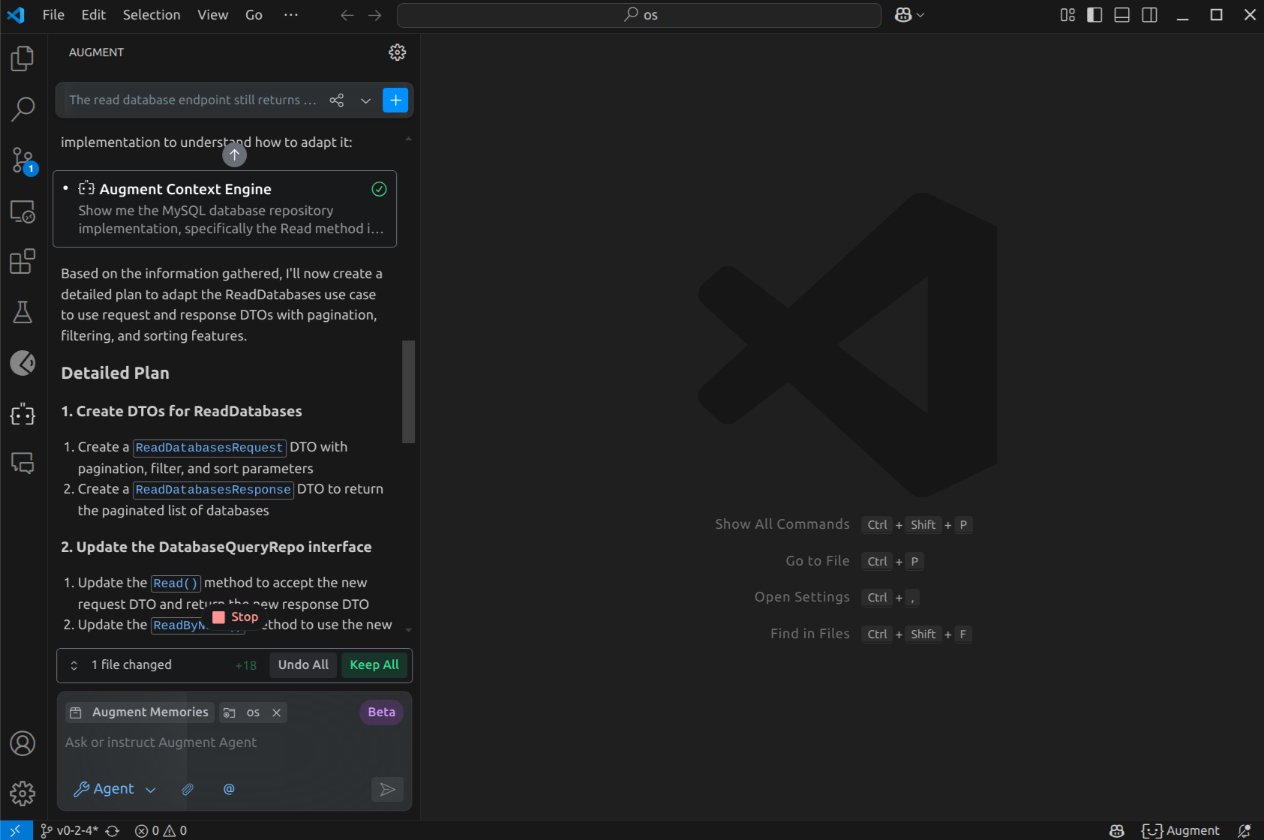

It's possible that the Agent performs faster on weekends, as it quickly responded to the prompt (detailed in the previous article) and initiated the "Context Engine", elaborating a "Detailed Plan".

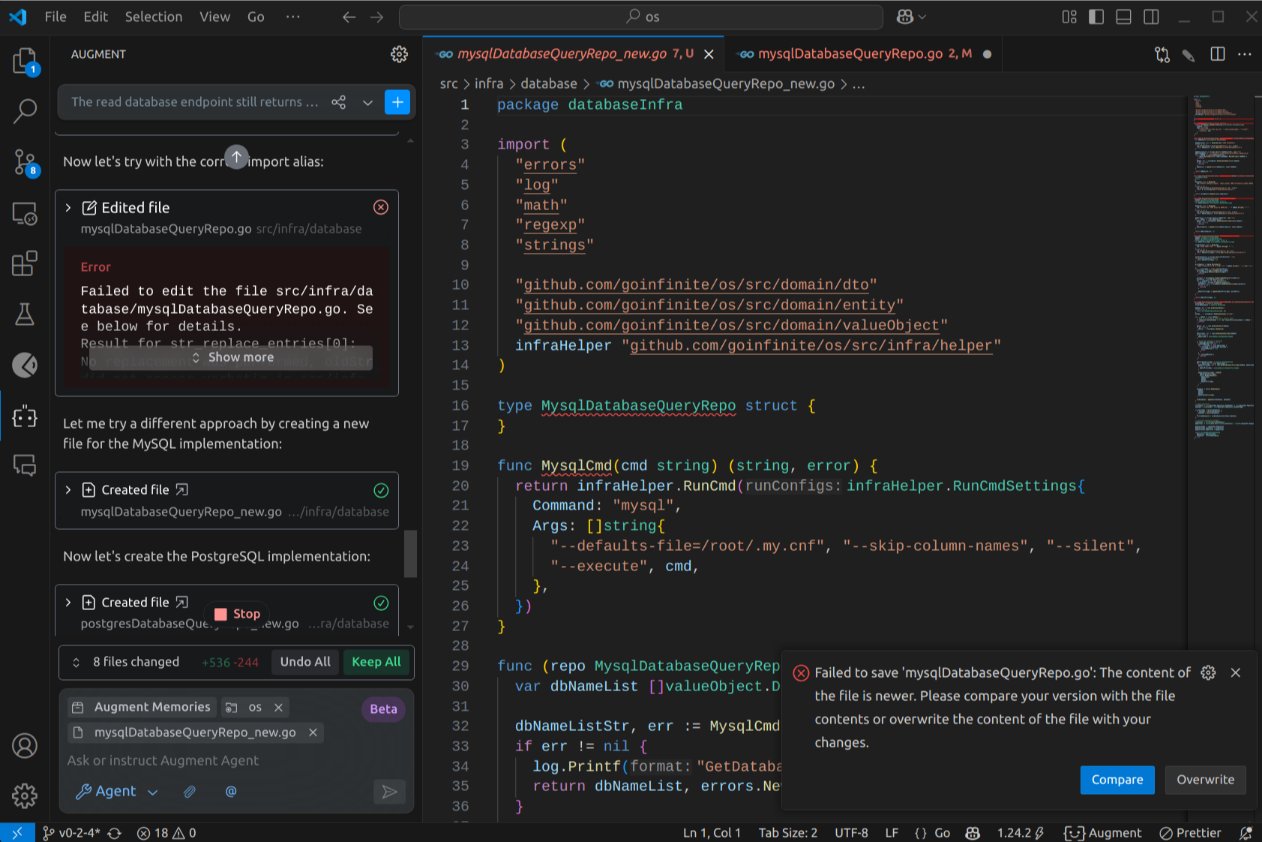

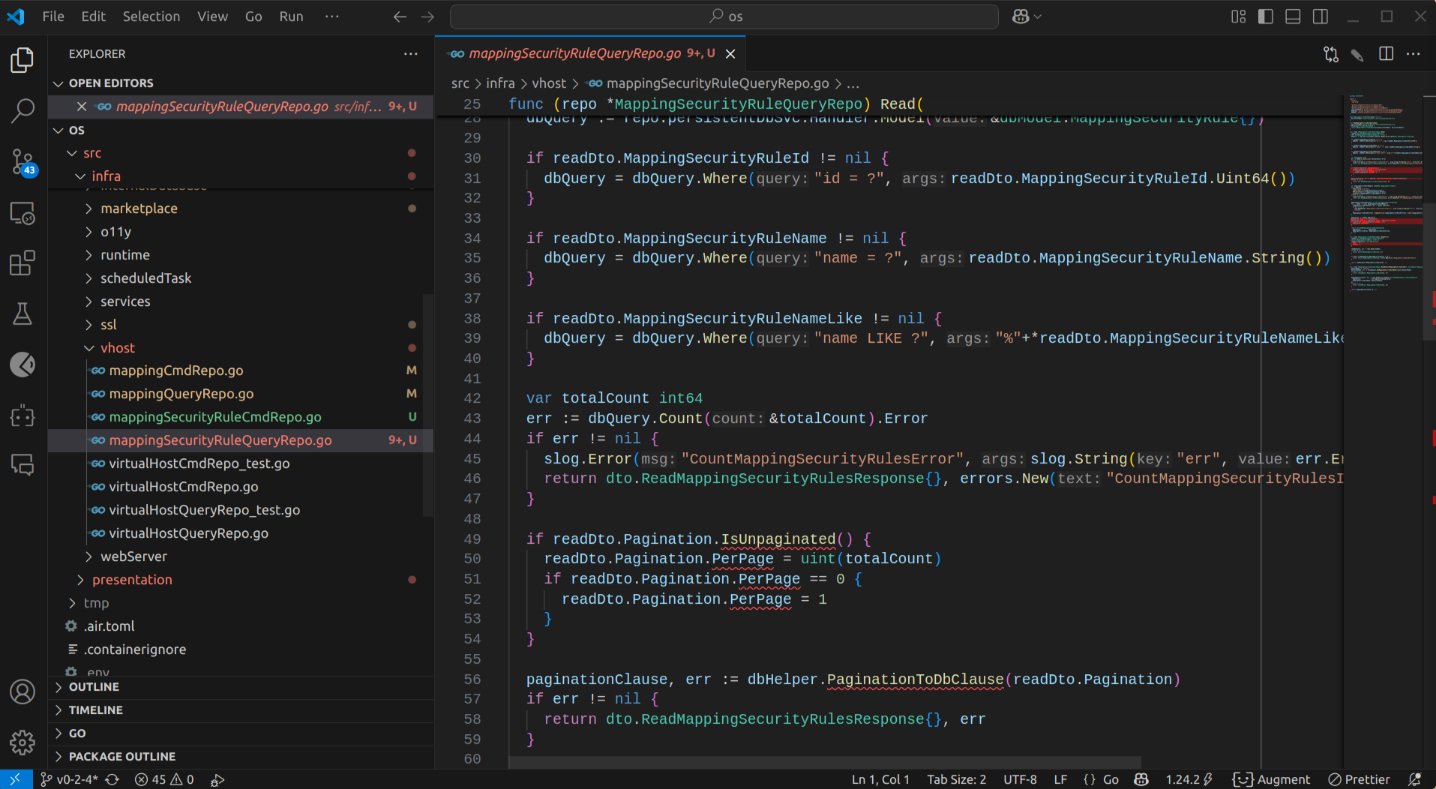

Augment successfully created the DTOs and use case, including the addition of DatabasesDefaultPagination. Interestingly, it scanned 50 lines of code at a time and persisted despite encountering some issues. Notably, when it faced errors with a particular file, it created a new file with a _new suffix, which differed from the approach taken by other tools:

Currently, Augment offers unlimited agent calls during its beta phase, akin to Copilot Agent Mode. However, rather than escalating uncontrollably, Augment concluded the task after 10 file modifications. I then proceeded to examine the results in detail, file by file. Here are the initial errors identified:

- The

databaseQueryRepointerface retained theReadByName()method; - Filtration and pagination were applied within the MySQL and PostgreSQL implementations instead of utilizing the main

Read()method, leading to code duplication; - The PostgreSQL query implementation was not rewritten using new libraries as Cursor had attempted, but the SQL queries underwent significant changes, AND a function named

UserExists, used by the command repository, was removed; - The unit tests for the MySQL and PostgreSQL implementations were not adjusted to reflect the changes.

Clearly, the task was not completed to my expectations in a single pass. The agent left a portion of the code in a broken state. However, it had, thus far, performed more effectively than any other tool I had trialed. Several of the issues were readily apparent to the compiler, suggesting that the Augment team might benefit from incorporating a check of the language server output.

Intrigued by its progress and the relative ease of rectifying most of the errors, I was curious to see how far it could go. Consequently, I issued a second and a third prompt to refine the output. On occasion, the agent provided somewhat misleading information, but at other times, it introduced rather neat improvements, such as enhancing the regex pattern of a parser:

Ultimately, it did produce compilable code. To be frank, despite having prior indications of its differentiated performance compared to other AI agents, I was somewhat surprised that it managed to deliver code that could be compiled.

Admittedly, its modifications to the code did break the PostgreSQL connection, rendering the listing of users non-functional. However, I was able to resolve these issues within a few minutes. The MySQL implementation, on the other hand, was functioning correctly.

The process resulted in 16 file modifications. Most required some degree of adjustment, often minor refinements. This task, which might have occupied a junior developer for 6-8 hours, or perhaps a mid-level developer for around 6 hours, took me just over an hour, including the time taken to document the experience in this article.

Plan Usage and Junior Developers

Prior to commencing this task, my usage statistics stood at 15 chat messages, 10 user agent requests, and 80 user agent tool uses. Upon completion of the second task, these figures had increased to 22, 15, and 160 respectively.

Currently, chat, completions, and tool usage are unlimited, and there appears to be no immediate indication that this will change. Augment's business model appears to be significantly predicated on agent calls. As previously mentioned, these are unlimited under the Developer plan ($30), although the plan page features a struck-out text indicating "500 requests per month" and "$11 per 100 additional requests".

If you're willing to allow the AI to be trained on your code, you can use the free Community plan, which limits you to 50 agent calls and 3,000 chat messages, with an additional "$9 per 100 additional requests".

The task required only 5 agent requests to complete. On the free plan, this efficiency translates to approximately 10 similar tasks within the monthly allocation, with the option to extend to around 30 tasks for an additional $9. For developers with moderate needs, this pricing structure offers significant value compared to alternatives.

Should Augment adhere to the $30 plan with 500 requests per month, and as long as you craft your prompts with rich details, this allocation could enable a developer to complete approximately 100 tasks. This is a significant bargain compared to alternatives like Windsurf.

My experience so far has convinced me to migrate away from Copilot. However, I'm unsure about the suitability of Augment for junior developers. Typically, they do not exhibit the same level of attention to architectural considerations and planning as their more senior counterparts.

In my observation, even with repeated guidance, junior developers tend to revert to a more code-centric and implementation-focused mindset, rather than prioritizing business logic and pseudo-code.

One could consider establishing a "prompt approval pipeline" overseen by more senior developers, but this arguably borders on micromanagement. If a senior developer is required to comprehend the business logic and approve the prompts, one might question the primary purpose of having a junior developer in the first instance.

I won't delve deeper into this topic, as it deserves a separate article. However, I foresee junior developers quickly exhausting their request limits without generating significant value. If I were on a budget constraint, I would consider keeping junior developers on Supermaven or Cursor, or using the Community free plan (if AI training is not a concern), until they demonstrate the maturity to effectively utilize the Developer plan.

Task 3: New Feature

This particular task represents a more significant test, offering a clearer indication of the agents' true maturity. Upon initiating the task, the usage stood at 24 chat messages, 17 user agent requests, and 178 user agent tool uses.

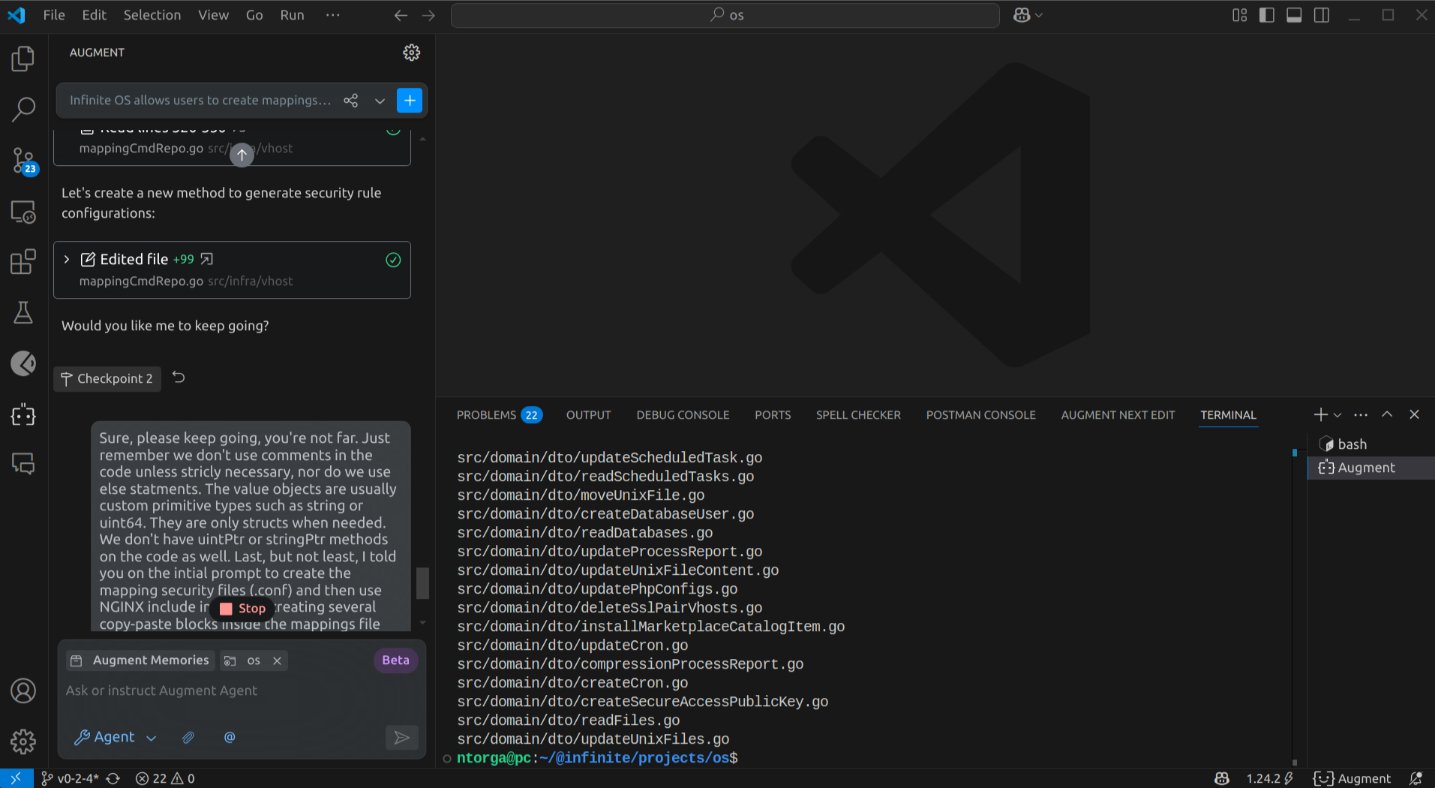

After running and modifying 23 files, it paused and inquired: "Would you like me to keep going?". This likely represents a safeguard to prevent the agent from spiraling into uncontrolled modifications. I reviewed the changes made thus far and gave my approval, along with some supplementary notes based on its progress:

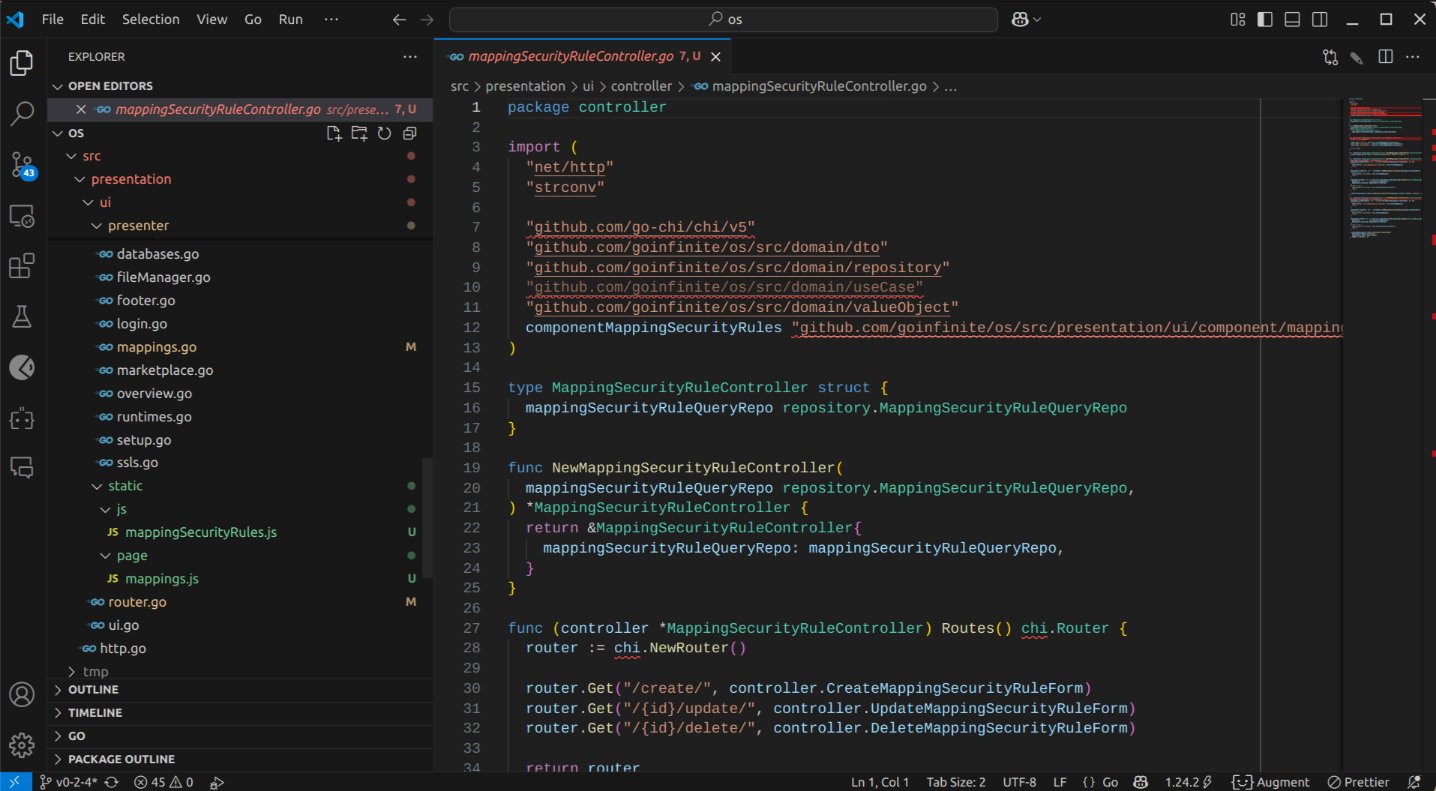

This presents an interesting scenario, as other agents have a tendency to lose their train of thought when interrupted. Despite not having completed its run, I observed it working on the user interface (UI), which in itself is a more significant step than many other agents have managed. It retained the need to address the UI, even after pausing to seek my continuation.

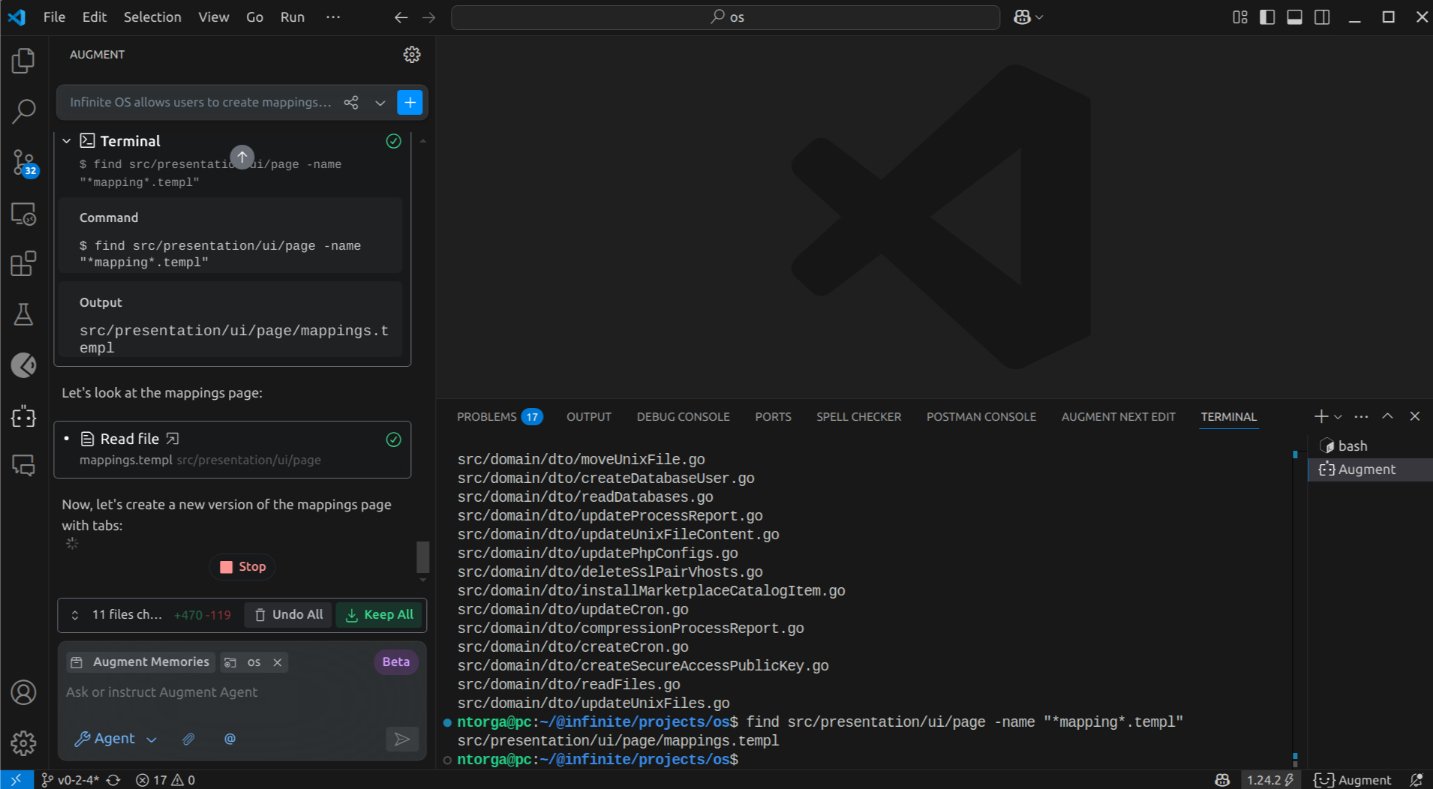

Our front-end architecture is not your run of the mill React Single Page Application (SPA). Instead, it employs a blend of a-h/templ with Alpine.js, HTMX, and Tailwind CSS. The agent's ability to work within this framework, despite the likely scarcity of online examples, is rather intriguing. By this stage, 37 files had been modified.

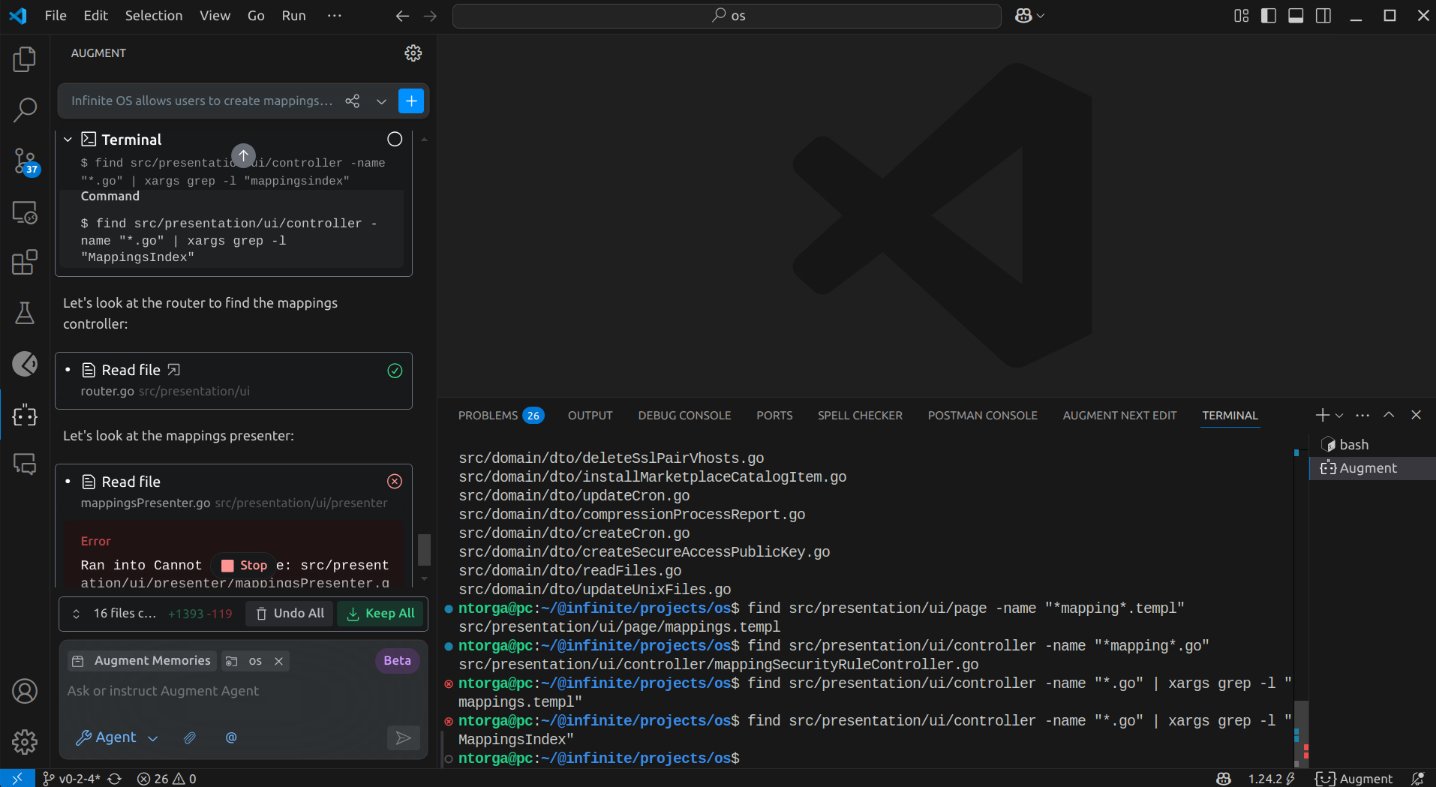

The agent attempted to locate the controller for the Mapping UI section, and after two unsuccessful attempts with find commands it prepared, it reverted to the UI's router.go file. It then realized it was searching for presenters rather than controllers, which is another peculiarity of our front-end structure. It again requested confirmation to proceed, and at this point, my primary instruction was simply to continue.

A total of 40 files had now been modified. The agent declared that all intended actions were complete. The moment of truth had arrived. Had it been successful? The complexity of this test case provided a meaningful opportunity to evaluate the tool's capabilities in a challenging scenario.

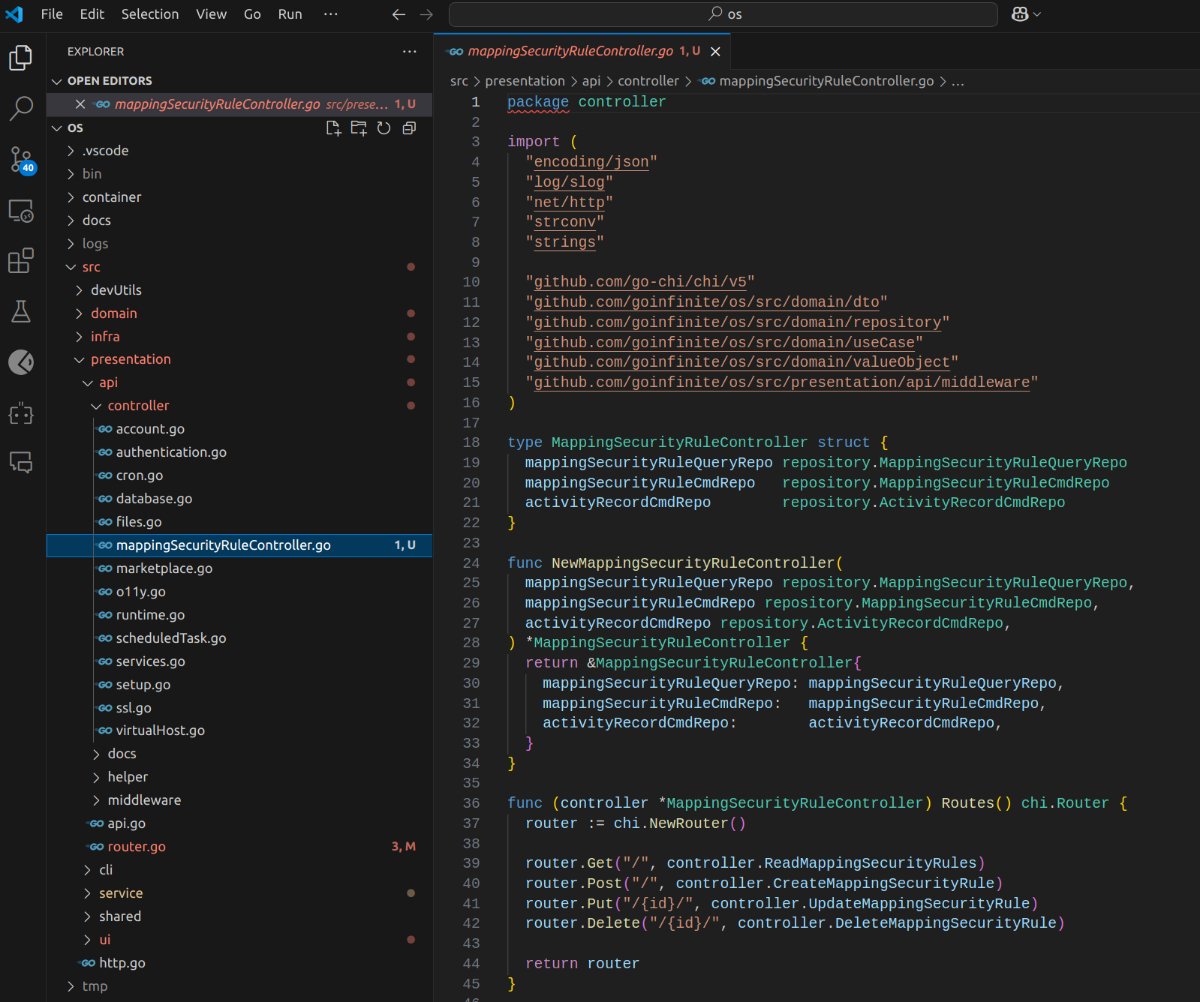

Upon reviewing the code, I was greeted by a plethora of red text in the VSCode file browser, indicating errors. Not entirely unexpected, given the complexity of the task.

Let's begin our assessment from the API layer. Oh dear, it appears to have introduced the Chi router into the controller. Our project utilizes an entirely different framework (Echo)! This is not an auspicious start.

The usage statistics now stood at 26 chat messages, 21 user agent requests, and 300 user agent tool uses. It accomplished a substantial amount of work for just 5 agent calls, for which it certainly deserves credit. As noted in the first article, this task is far from trivial and would likely be allocated a full week's work for a mid-level developer.

I proceeded to examine the generated code to ascertain whether it could at least serve as a foundation for completing the task myself. While the API layer appeared problematic, what about the domain layer?

The Data Transfer Objects (DTOs) were generally acceptable, with the exception of BandwidthLimit, which ideally should have utilized the Byte value object. Otherwise, the DTOs and entities were satisfactory. Regarding the repository interfaces, I might have opted to extend the existing mapping interfaces rather than creating new ones, but this is a minor point.

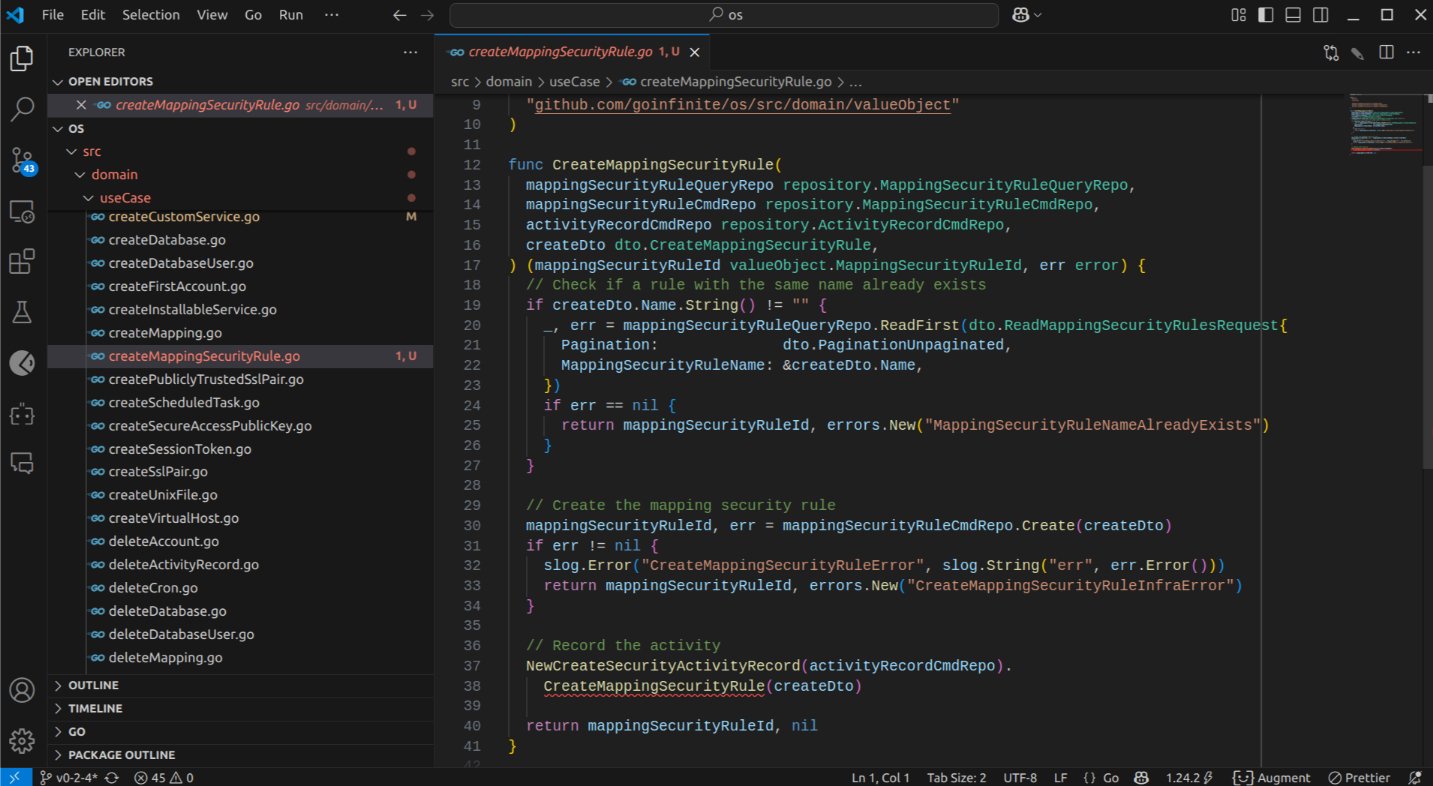

The use case, however, presented a more significant deviation. It assumed a constraint against repeated rule names and employed comments, despite my explicit instruction via the Augment Memory feature to avoid comments. It also omitted the creation of the CreateMappingSecurityRule security event altogether – another issue the language server would likely have flagged.

The value objects it generated, again, despite my explicit instruction in the second prompt to use custom types rather than structs, remained structs. This will necessitate refactoring, although it did correctly identify the naming conventions.

Returning to the presentation layer, the Command Line Interface (CLI) remained untouched – a disappointment, as it was included in the "Detailed Plan" outlined in the initial prompt. This likely represents a loss of context following the interruptions.

The presentation service layer, however, was reasonably well-executed. It correctly addressed the majority of requirements, and I could work with it without significant difficulty. Some adjustments will be necessary, but overall, it is quite acceptable.

The UI layer, on the other hand, was rather problematic. It again imported the Chi router and created a controller. Furthermore, it appeared to have mixed Go templates directly within the presenter.

It did, however, generate some template components that might be salvageable. It also created a new static directory containing JavaScript files copied from another part of the codebase. Weird. To put it mildly, the UI implementation is rough.

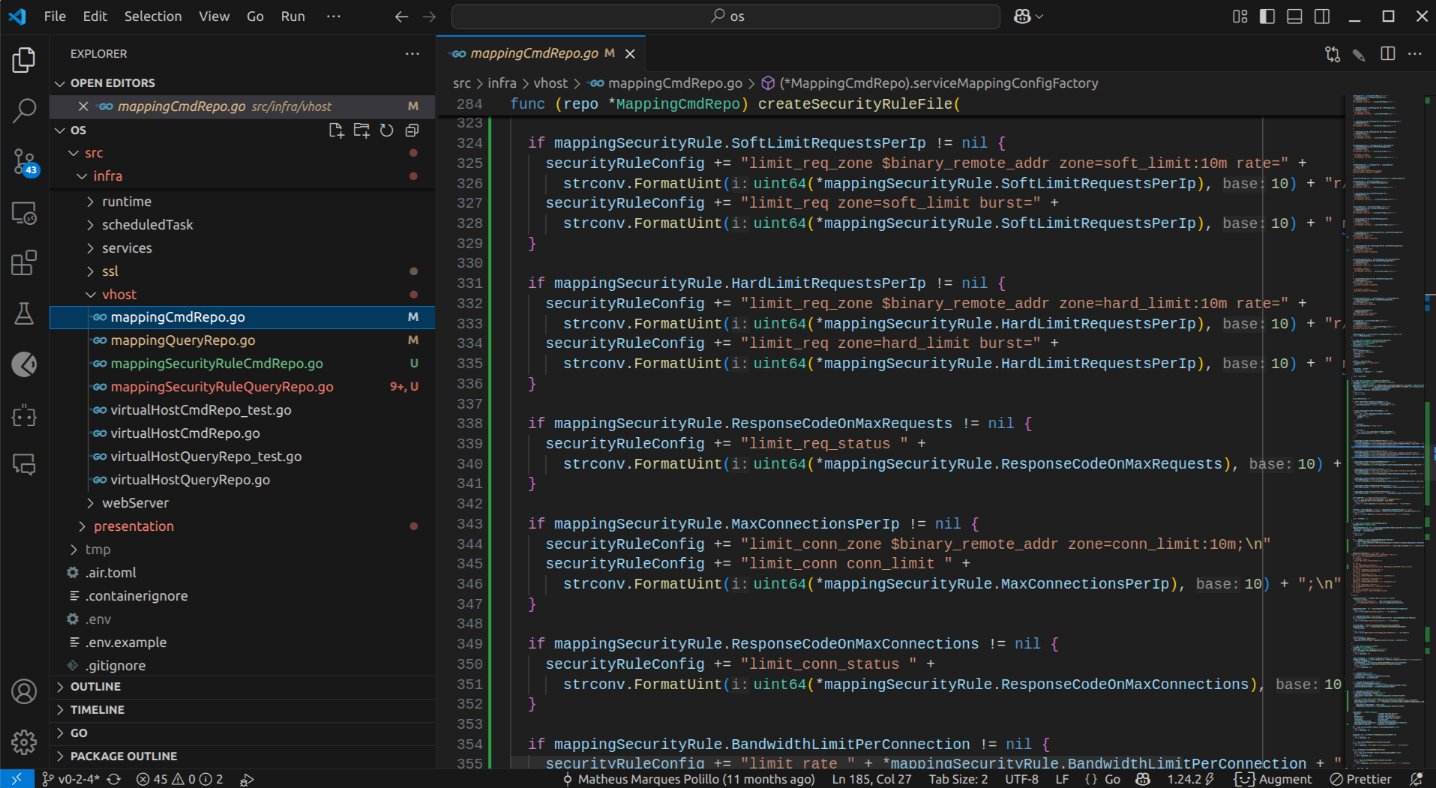

The infrastructure layer is... well... not entirely without merit. While the code is not the worst I have encountered, it will require considerable refinement. It did grasp the concept of creating individual .conf files for each rule and using include directives in NGINX.

Curiously, it performed better on the command repository implementation, which is arguably more complex, than on the query repository, where it appeared to hallucinate some helper functions.

Out of sheer curiosity, I prompted it to rectify the API controller. This proved to be a relatively straightforward task, and after analyzing some examples I provided, it performed commendably.

I might venture to suggest that with a few hours of further interaction, I could potentially bring the majority of the code to a somewhat usable state, with the notable exception of the UI, which appears to be a lost cause.

In any event, it took approximately one to two hours for the agent to generate this substantial amount of code, and more than half of it is potentially salvageable. This is a rather impressive output. Given its 65.4% score on the SWE-bench, it is perhaps fitting that this more complex task appears to have yielded roughly 65.4% of the required code.

I had not even planned to work on the Mapping Security Rule feature imminently, but given the significant head start provided by Augment, I may well undertake it this week.

Conclusion

Augment has made significant progress on the second task, completing around 90%, and has also achieved approximately 60% completion on the third. In comparison, Cursor and Windsurf have barely scratched the surface of these tasks, as seen in the first article. This gives Augment a notable advantage, particularly as it currently offers competitive pricing.

Are AI agents ready to replace software developers entirely? Not in the slightest, and I am reasonably confident that we remain a year or two away from a point where genuinely complex codebases can be created without direct human intervention, particularly when dealing with less mainstream languages and frameworks.

It is crucial to note that I did not suggest the replacement of developers, but rather the creation of code without direct manual manipulation. The architectural design and planning phases remain significant undertakings that will likely continue to be the domain of human expertise for some time yet.

Indeed, thoroughly planning is where developers should increasingly focus their efforts, whether it aligns with their current inclinations or not. The future trajectory for developers undoubtedly lies in software architecture.

The performance demonstrated in these tests suggests that tools like Augment represent a significant advancement in AI assistance for software development. Organizations and individual developers may want to consider how these capabilities could be integrated into their workflows to maintain competitive productivity.

While certainly not without its imperfections, the sheer volume of tedious, repetitive work that it alleviates is truly remarkable. Intelligent auto-completion tools have already been shown to increase developer productivity by around 30%, according to some studies.

Based on these tests, enterprise-focused AI agents like Augment demonstrate potential to substantially increase developer productivity, possibly doubling output in certain scenarios when compared to traditional development approaches.

To tackle the two complex tasks outlined, Augment utilized a mere 5 agent calls per task. I anticipate that it would likely require an additional 10 to 20 agent calls to fully refine the generated code, should I choose to pursue that.

This figure remains within the bounds of the free plan's limitations and represents only a tenth of the allocation under the Developer plan. Should I proceed with this refinement, I shall certainly share the final agent call count on LinkedIn.

Augment does require further refinement in several key areas:

- Enhanced integration with language servers is necessary to identify incomplete code without relying solely on the models' inherent intelligence;

- The review process also requires considerable development, although overall, their current capabilities place them in a league of their own. Tools such as Cursor and Windsurf are beginning to feel somewhat antiquated by comparison;

- Context retention between prompts needs improvement, addressing instances where aspects planned in the "Detailed Plan" are not completed, or where explicitly prohibited actions are nonetheless undertaken.

The company's clear focus on context, the strategic combination of multiple models for robust planning, and the abstraction of much of the underlying AI complexity suggest a long-term vision. Their leadership team's background from a strong engineering culture (PureStorage), whose work I have admired since their involvement with Portworx, is also encouraging.

I sincerely hope they maintain the cost-effectiveness of the project and avoid adopting an "enterprise schedule a demo" model with pricing beyond the reach of most individual developers.

Comments